I'm a tenure-track associate professor at Shanghai Jiao Tong University (SJTU) and the PI of the RHOS Lab. I study Physical Reasoning, Embodied AI, and Human Activity Understanding. We are building a reasoning-driven system that enables intelligent agents to perceive, reason about, and interact with the physical world. Our open-source projects have garnered over 13,000 stars on GitHub.

My honors include the ICRA 2025 Best Paper Award (HRI, sole corresponding author), AI100 Young Pioneers (MIT review), the Baidu Scholarship, the WAIC Yunfan Award (twice), Shanghai Overseas High-Level Talent, the Wu Wenjun AI Science and Technology Award for Excellent Doctoral Dissertation, and Outstanding Reviewer for NeurIPS'20/21. I serve as an Area Chair for NeurIPS'24, NeurIPS'25, and ICLR'26, a lecturer for the "Computer Vision" course at the ACM Honor Class of SJTU, a member of VALSE EACC, and Deputy Secretary-General of the EAI Committee under the Chinese Association for AI.

Before joining SJTU, I worked closely with IEEE Fellow Prof. Chi Keung Tang and Yu-Wing Tai at the Hong Kong University of Science and Technology (HKUST) (2021-2022). I received a Ph.D. degree (2017-2021) in Computer Science from Shanghai Jiao Tong University (SJTU), under the supervision of Prof. Cewu Lu. Prior to that, I worked and studied at the Institute of Automation, Chinese Academy of Sciences (CASIA) (2014-2017) under the supervision of Prof. Yiping Yang and A/Prof. Yinghao Cai.

Research interests: Human-Robot-Scene

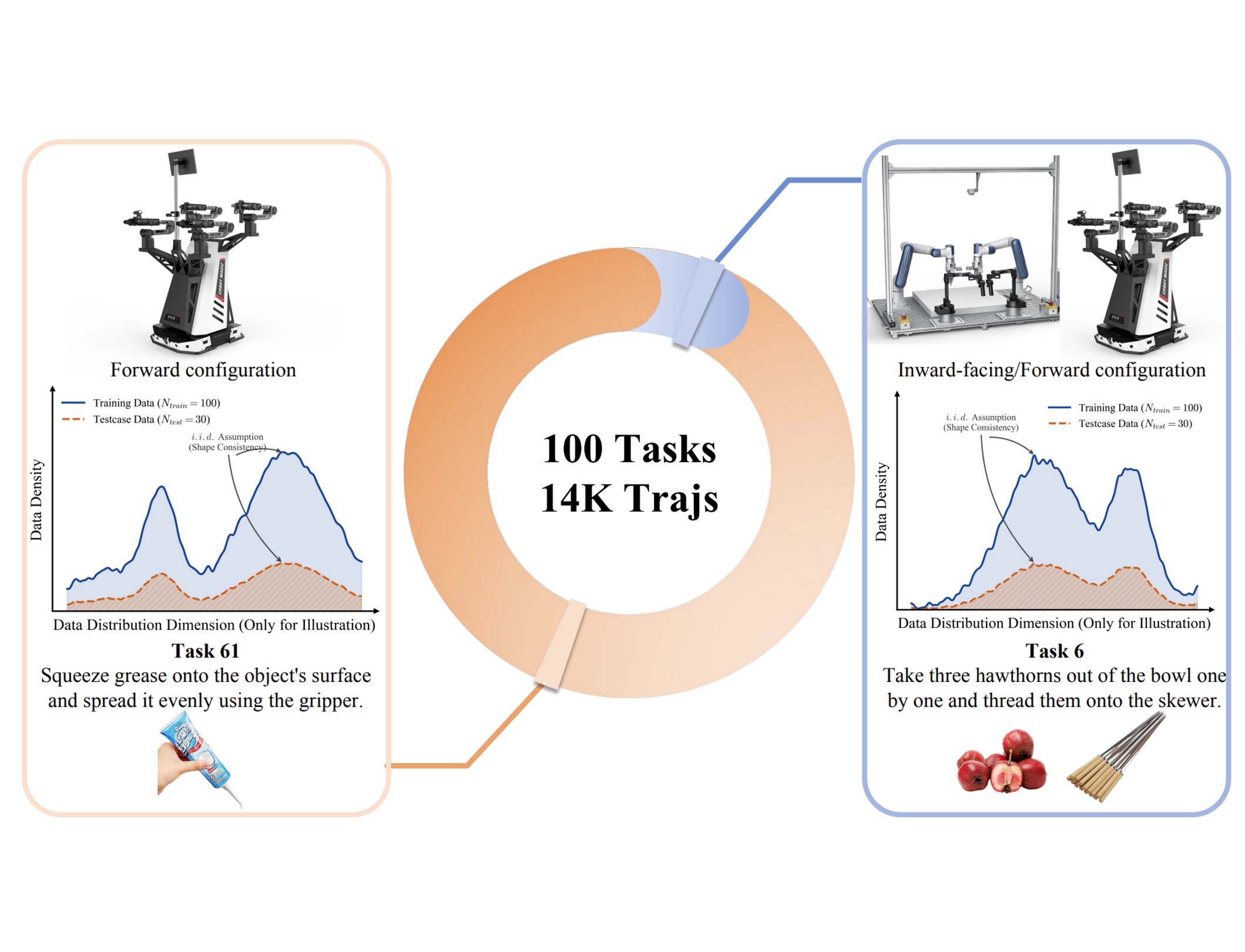

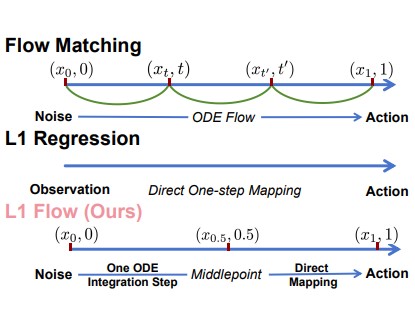

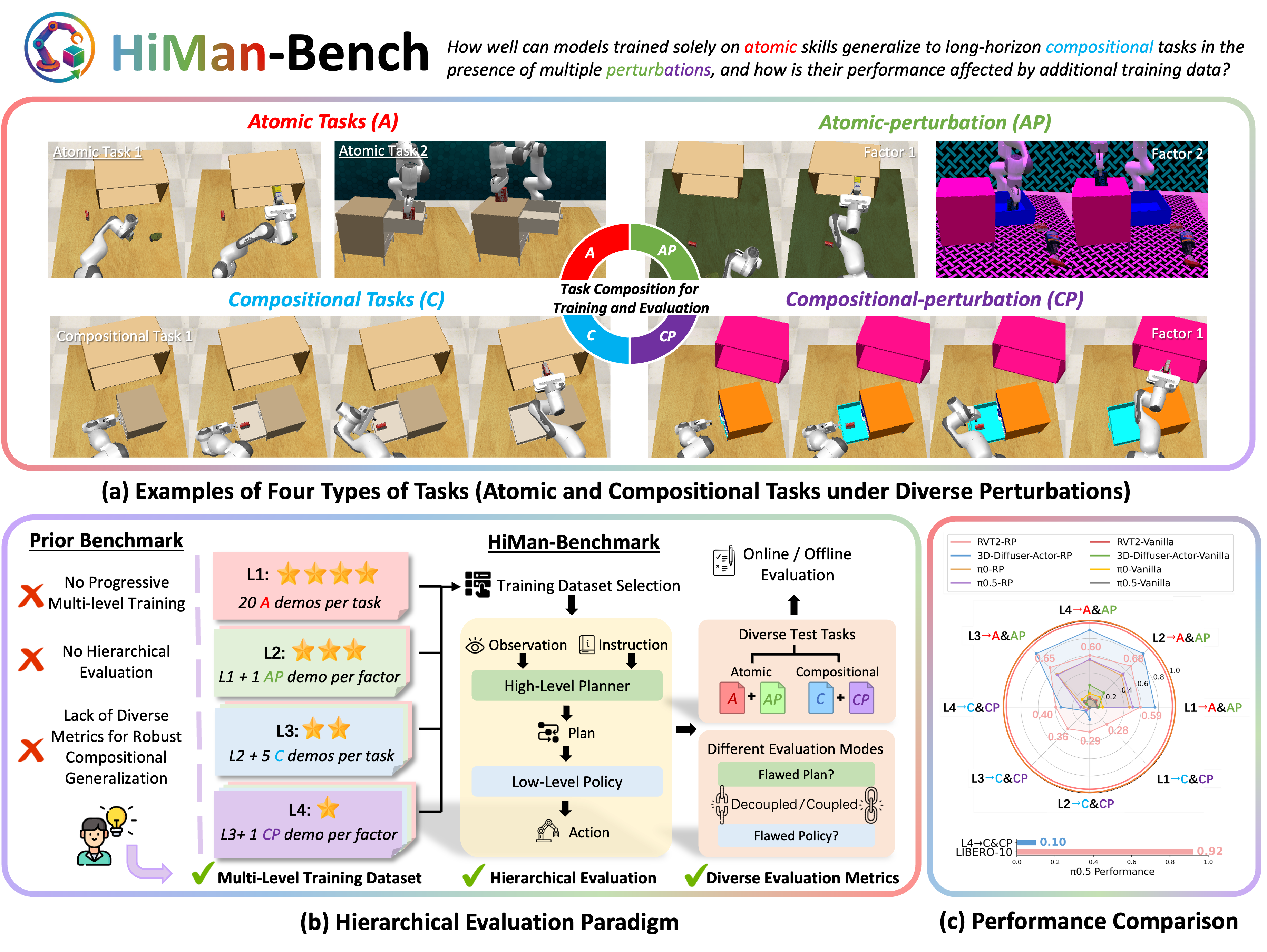

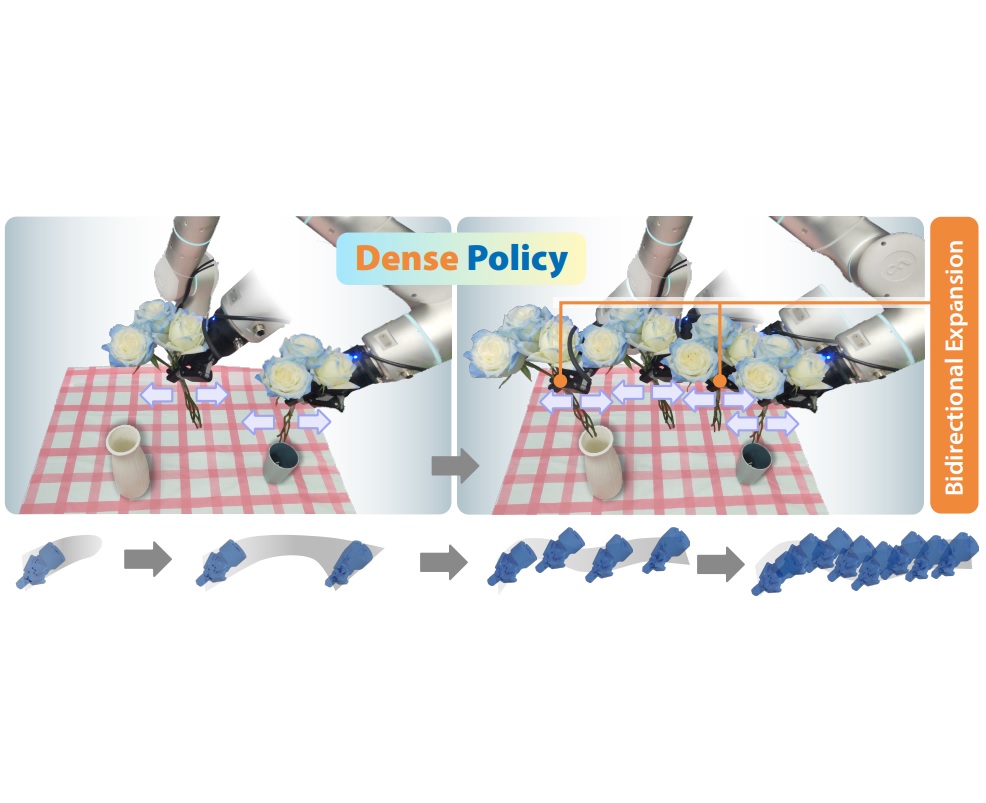

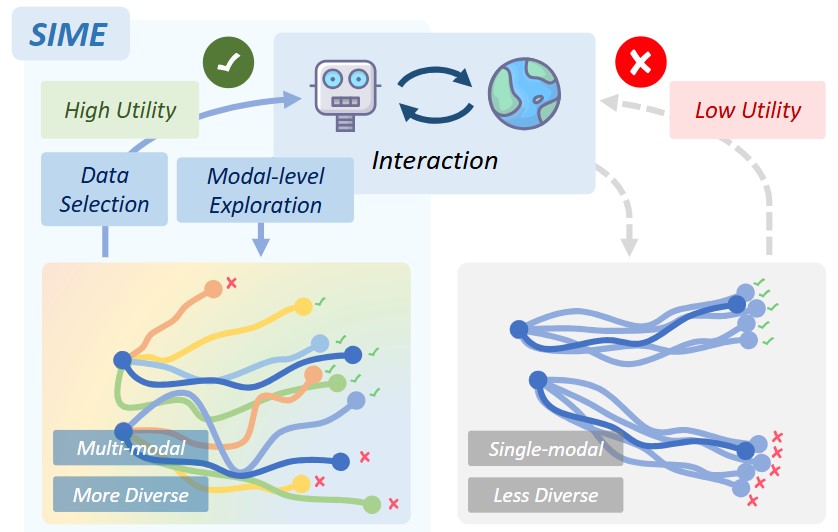

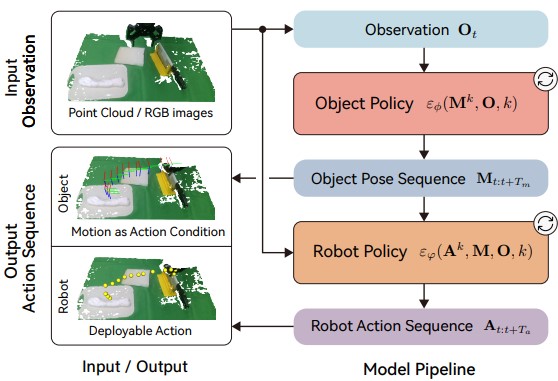

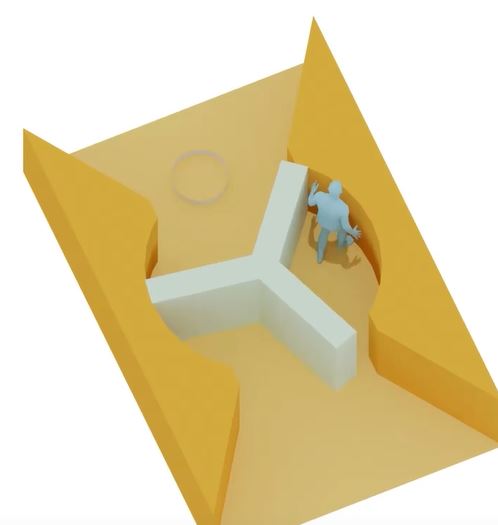

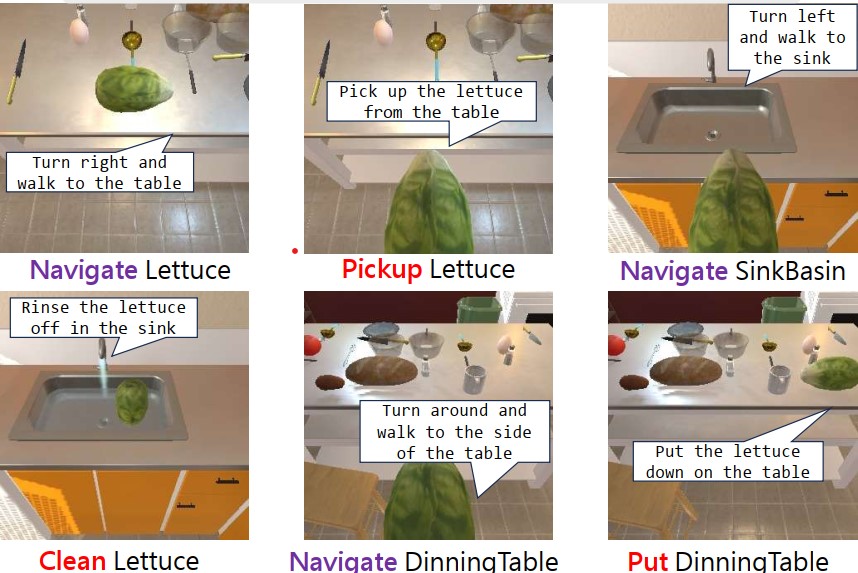

(S) Embodied AI: how to make agents learn skills from humans and interact with the physical world.

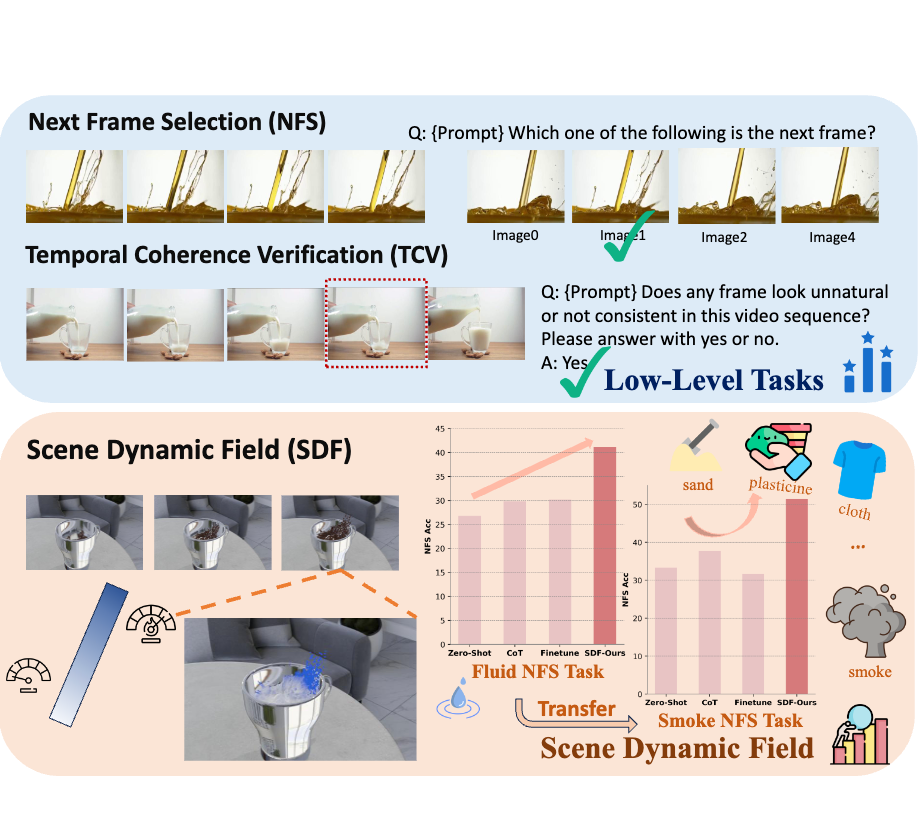

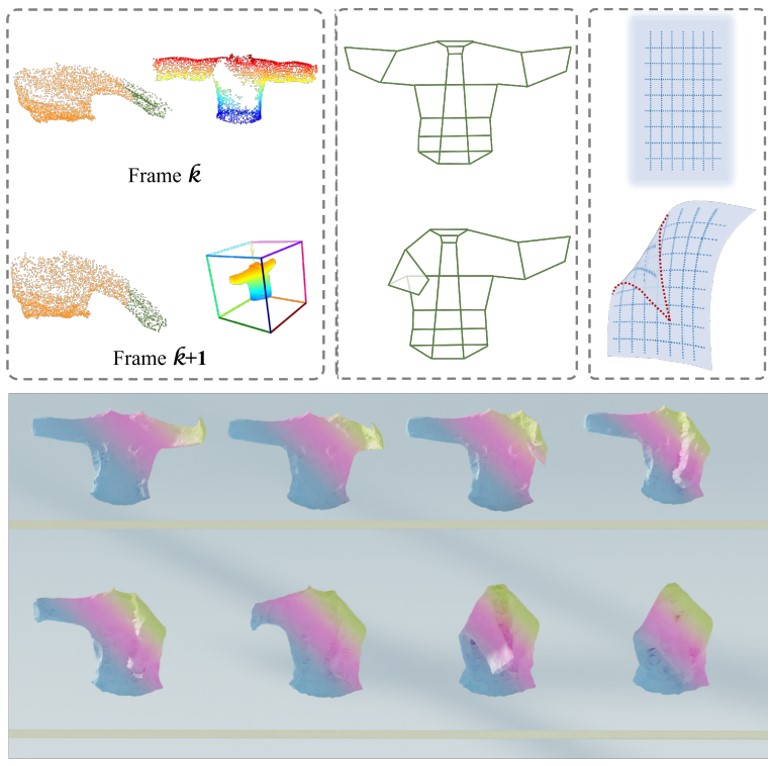

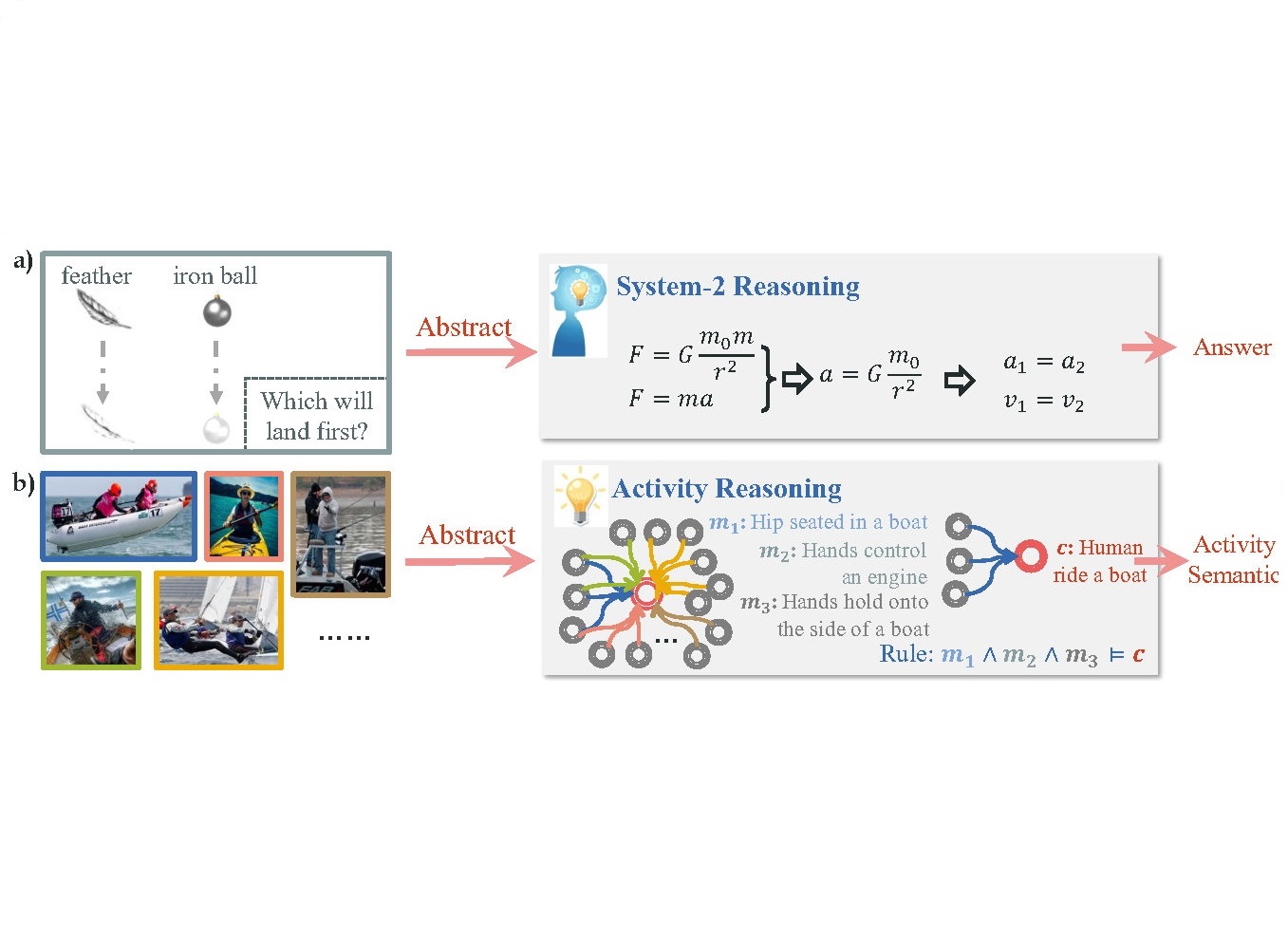

(S-1) Physical Reasoning: how to mine, capture, and embed the logic, causal relations, and laws from physical phenomena.

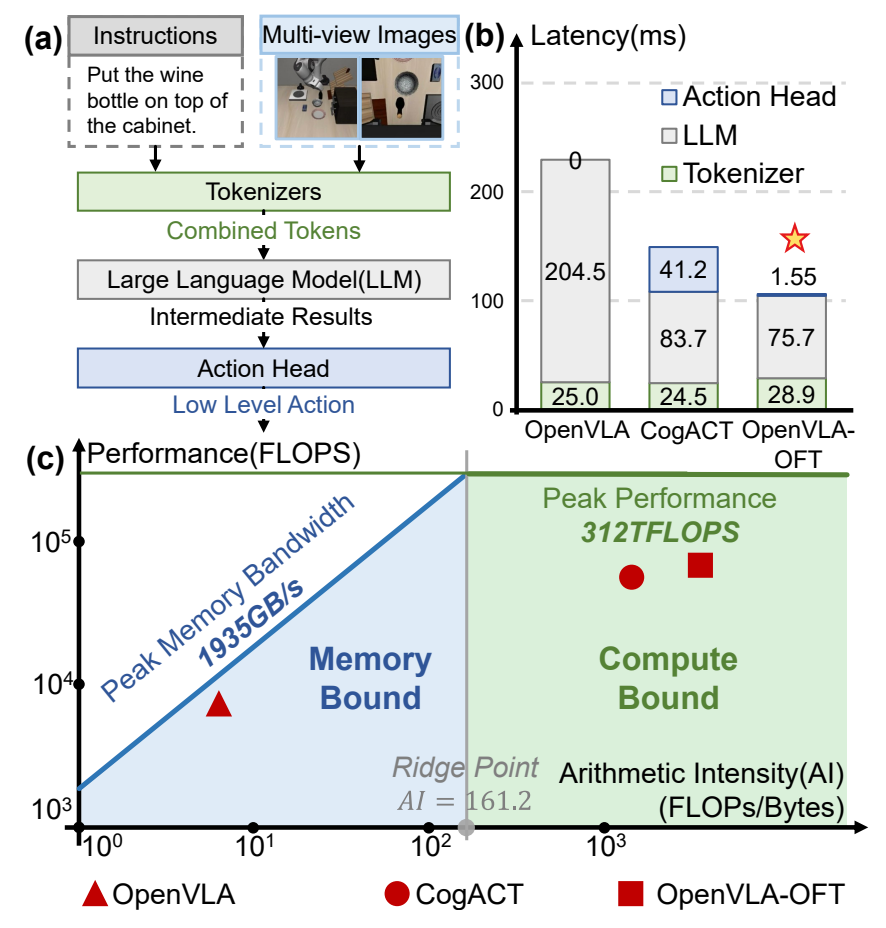

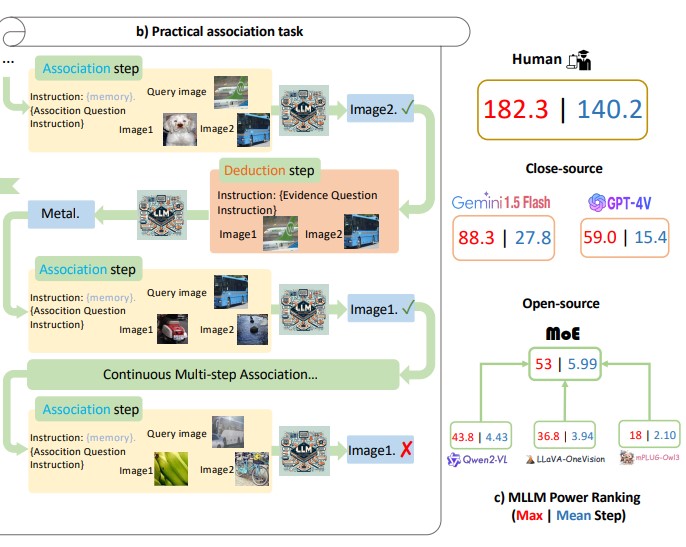

(S-2) General Multi-Modal Foundation Models: MLLM, VLA, pure 3D/4D large models.

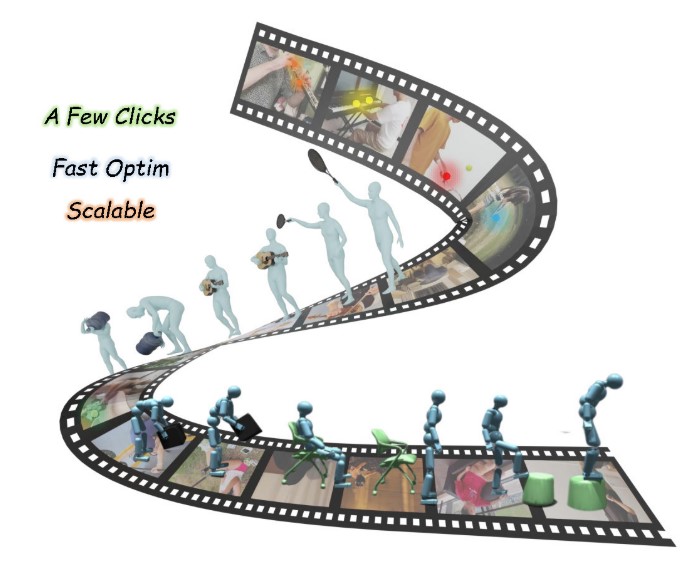

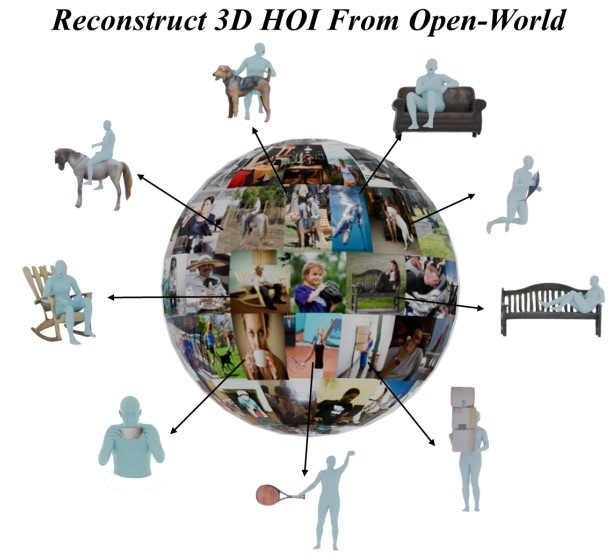

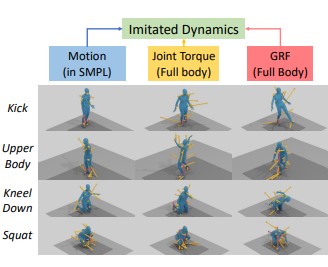

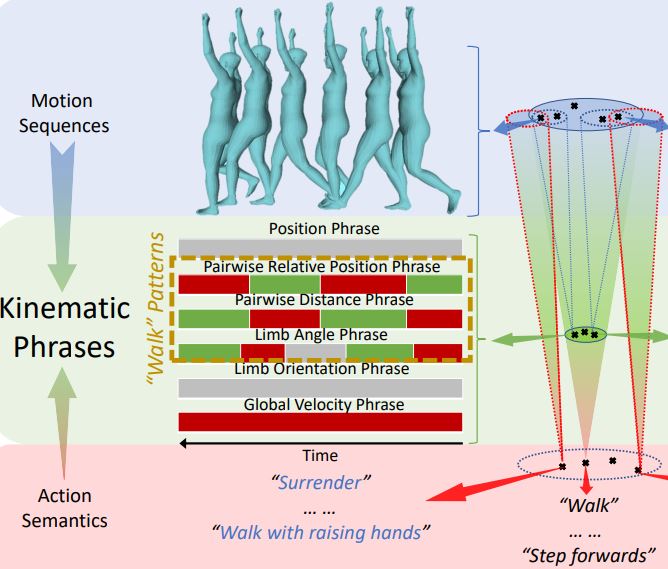

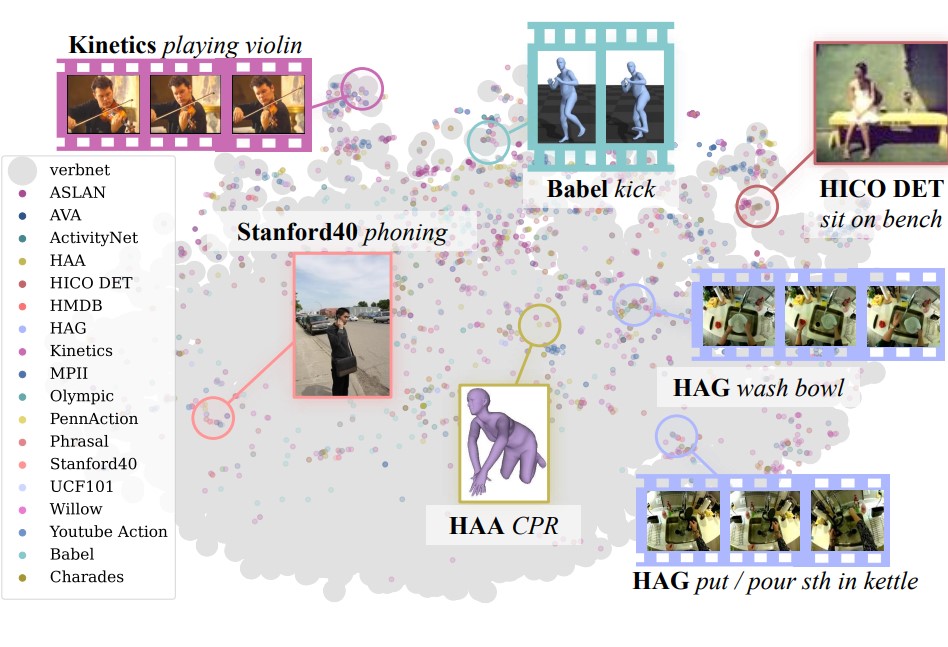

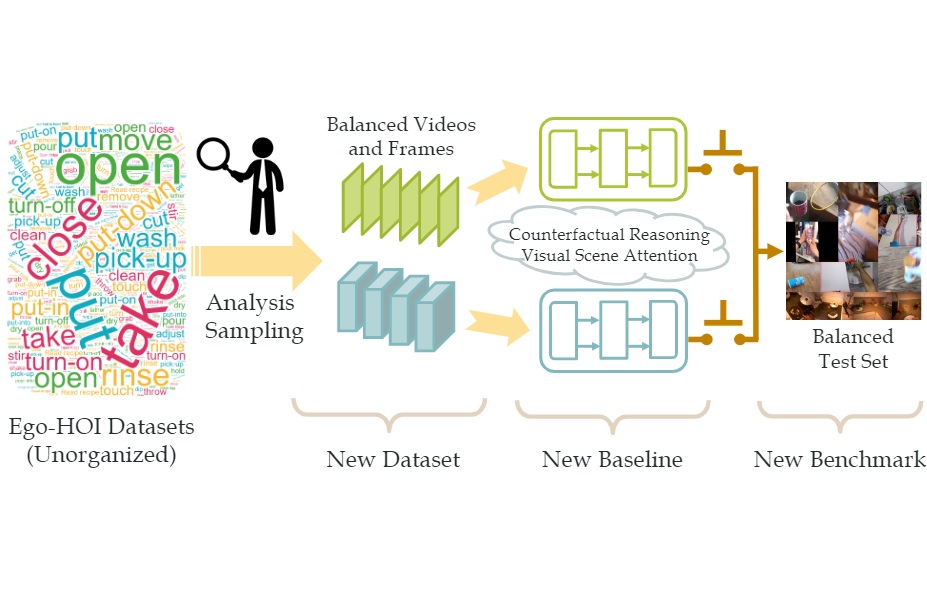

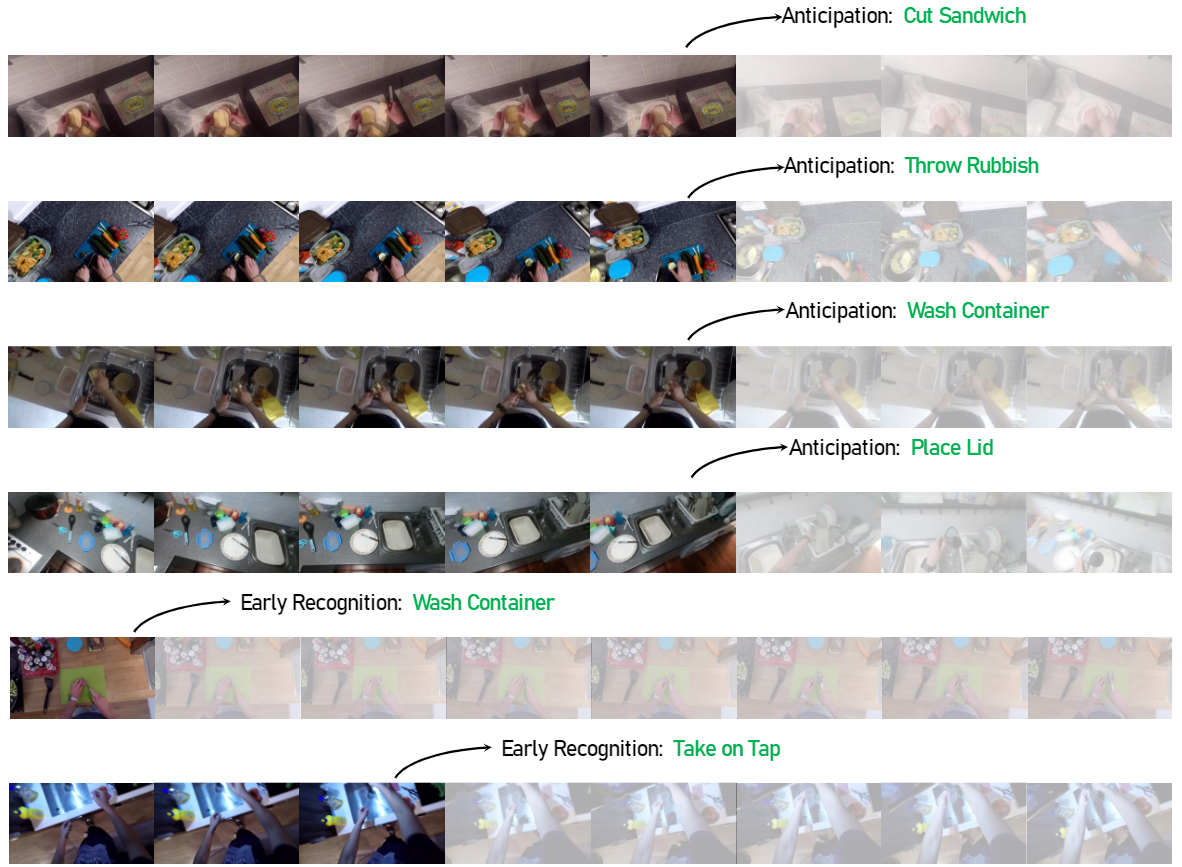

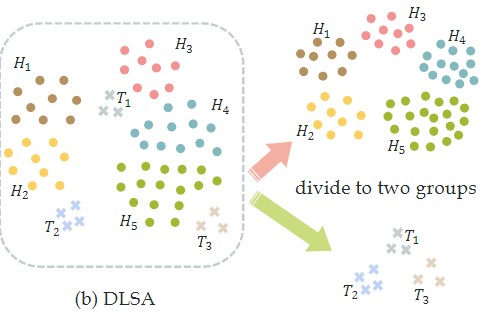

(S-3) Activity Understanding and Generation: how to learn, ground, and generate complex/ambiguous activity concepts (body motion, body-object/body/scene interaction) and object concepts from multi-modal information (2D-3D-4D).

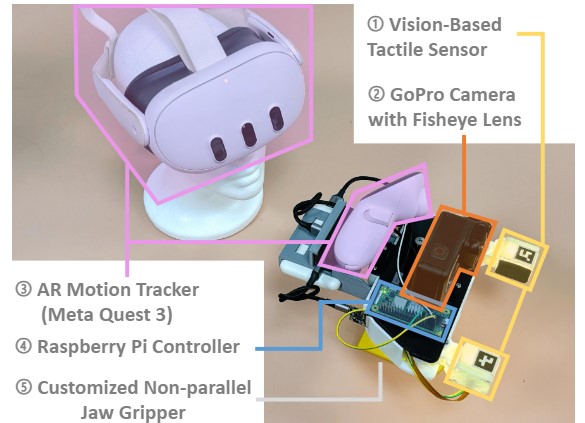

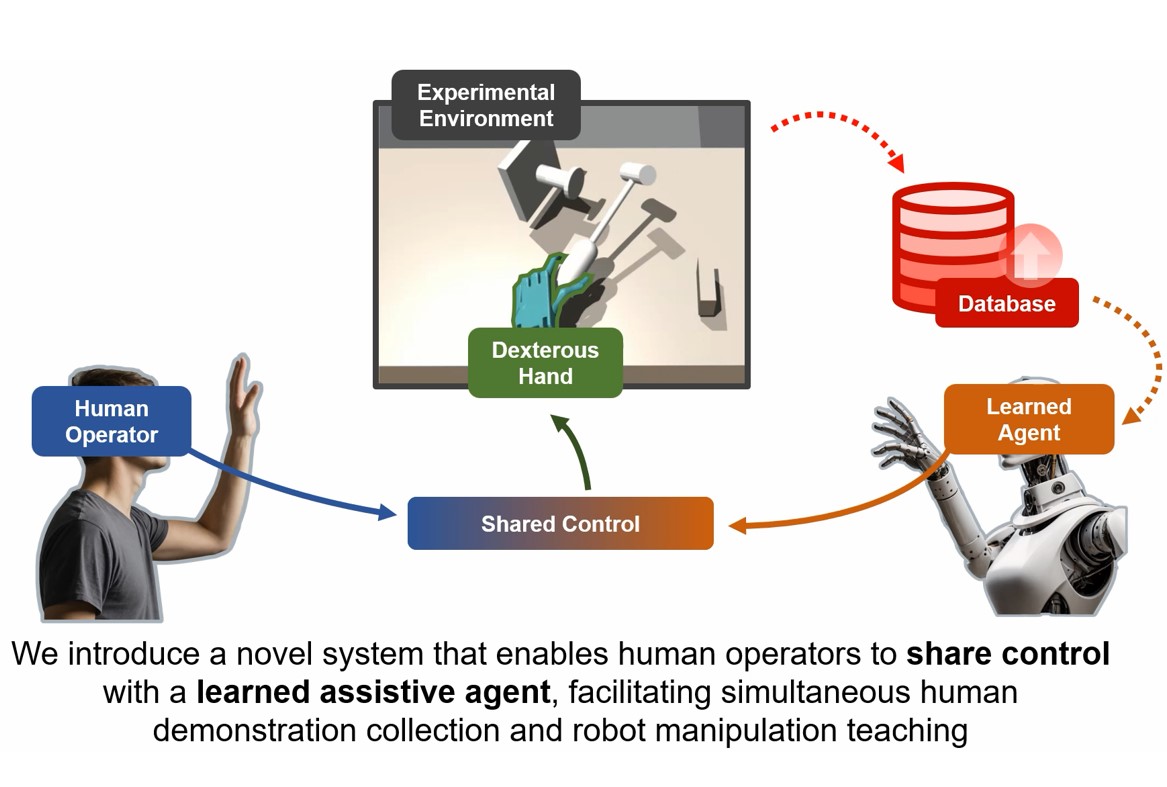

(E) Human-Robot Interaction: Teaching, Joint Learning, Cooperation, Healthcare, etc.

Recruitment: We are actively looking for self-motivated students (master/PhD, 2026 spring & fall) and interns/engineers/visitors (CV/ML/ROB/NLP background, always welcome) to join us in the Machine Vision and Intelligence Group (MVIG). If you share the same or similar interests, feel free to send me an email with your resume. Click Eng or 中 for more details.

arXiv 2026 [arXiv] [PDF] [Project] [Code] [Data & Model]

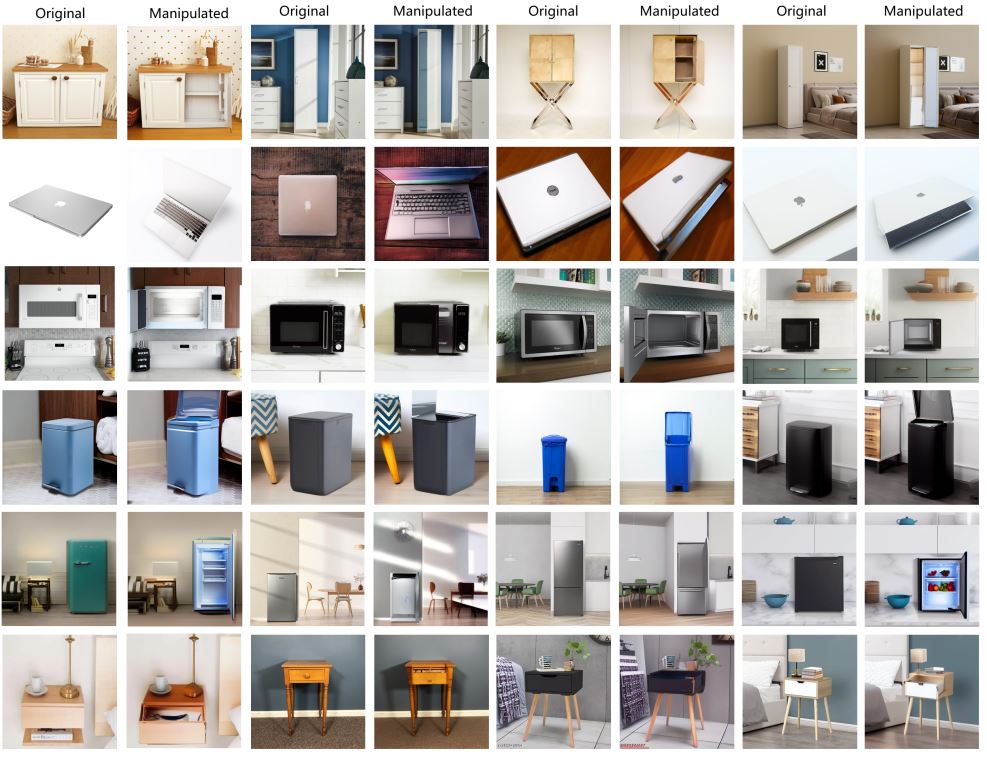

CVPR 2025 [arXiv] [PDF] [Project] [Code] [Annotation Tool]

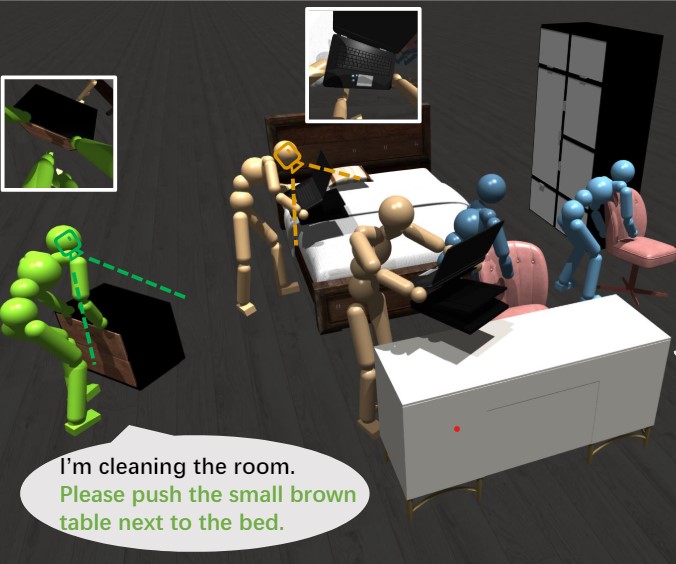

ICRA 2025 Best Paper Award on Human-Robot Interaction [PDF] [Project]

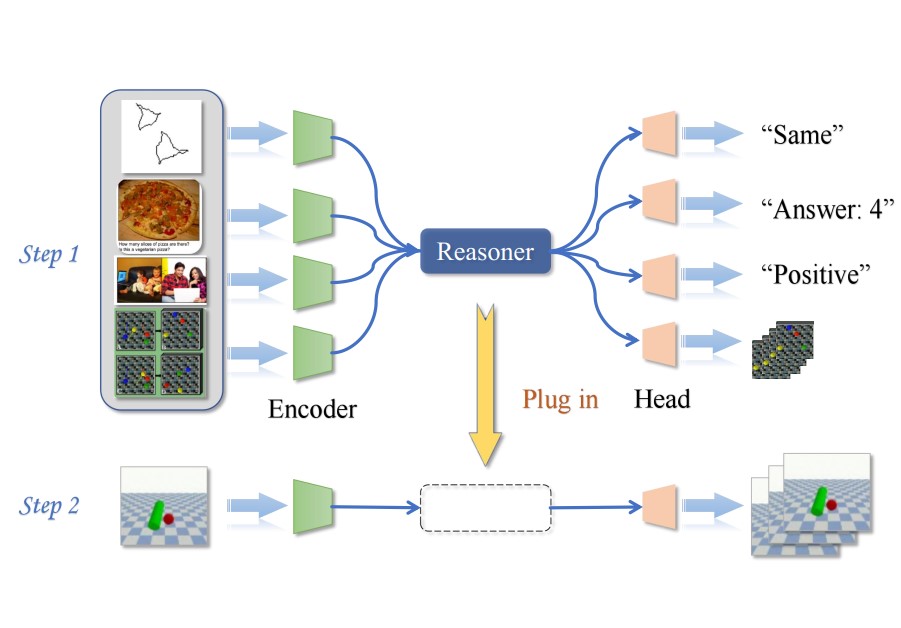

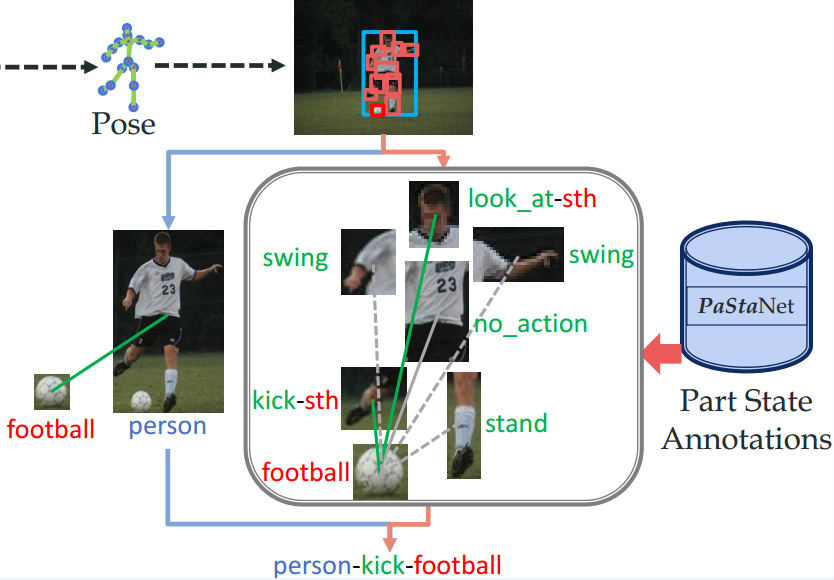

Tech Report, a part of the HAKE Project [arXiv] [PDF] [Code & Data]

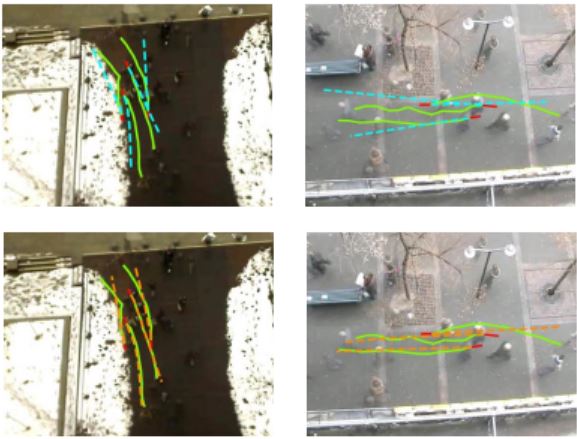

Frontiers in Behavioral Neuroscience - Individual and Social Behaviors 2023 [arXiv] [PDF] [Project]

CVPR 2022 [PDF]

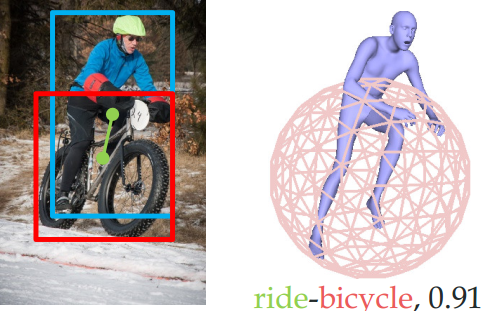

TPAMI 2022 [arXiv] [PDF] [Code]

An extension of our CVPR 2019 work (Transferable Interactiveness Network, TIN).

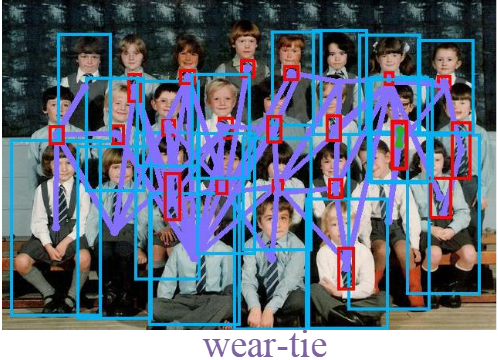

NeurIPS 2020 [arXiv] [PDF] [Code] [Project: HAKE-Action-Torch]

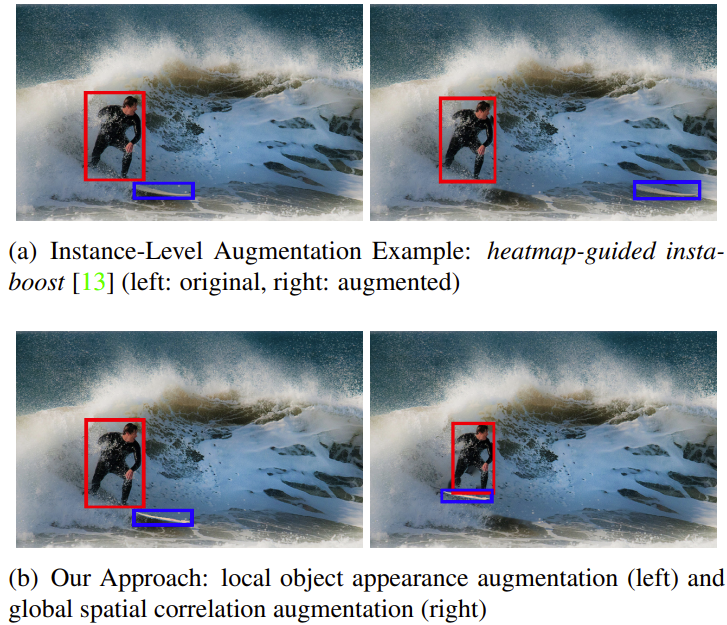

CVPR 2020 [arXiv] [PDF] [Video] [Slides] [Data] [Code]

Oral talk at Compositionality in Computer Vision, CVPR 2020.

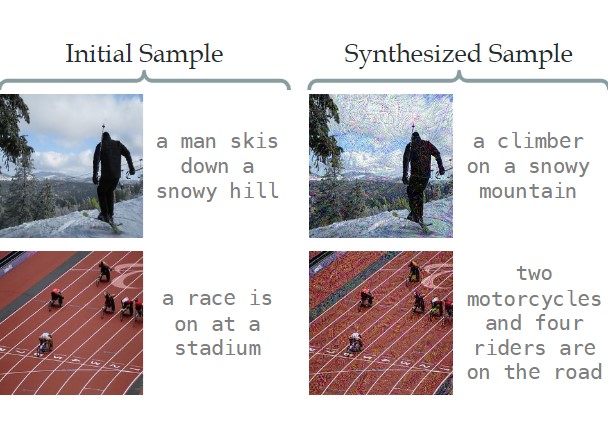

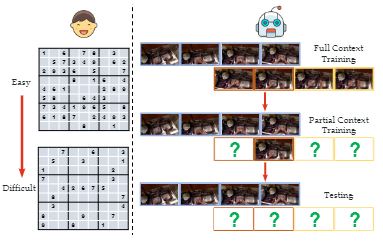

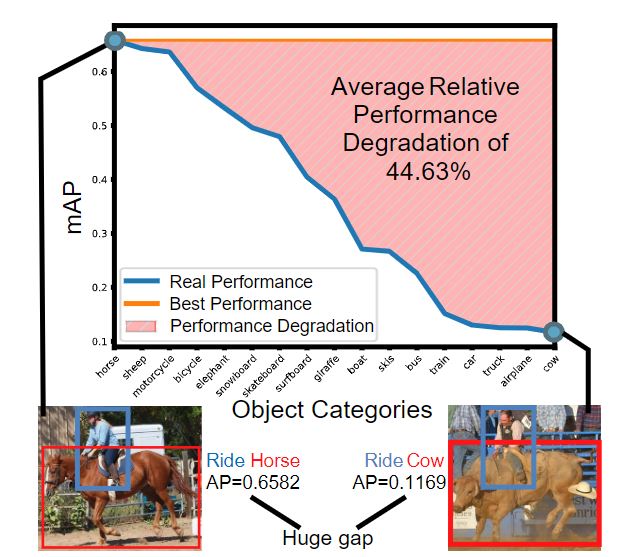

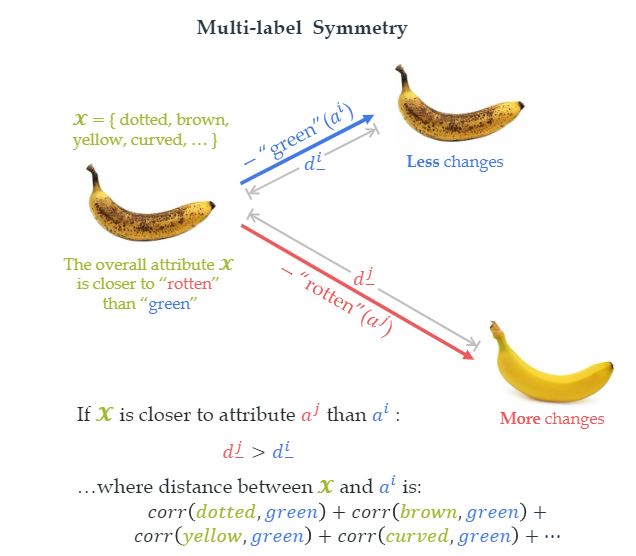

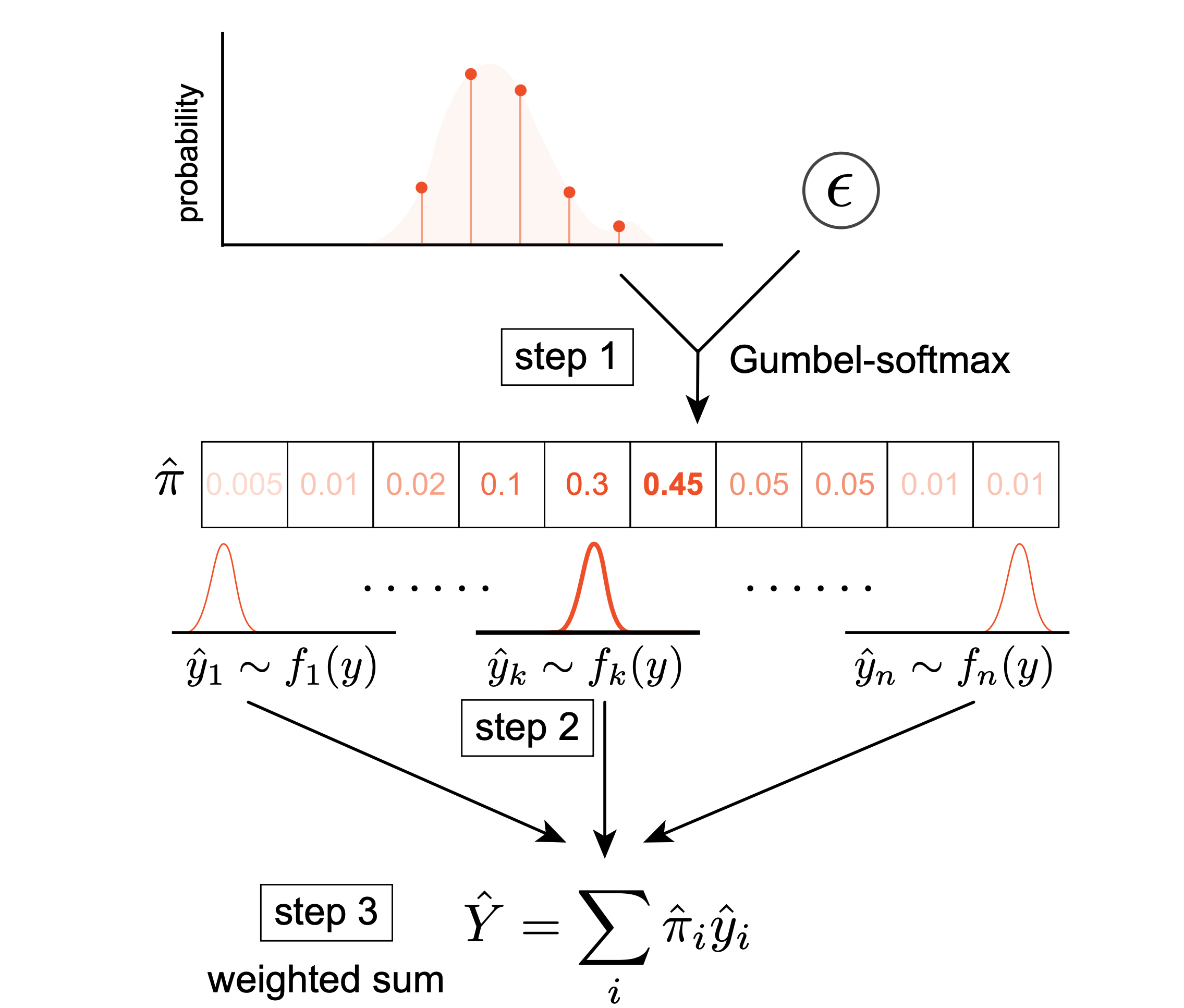

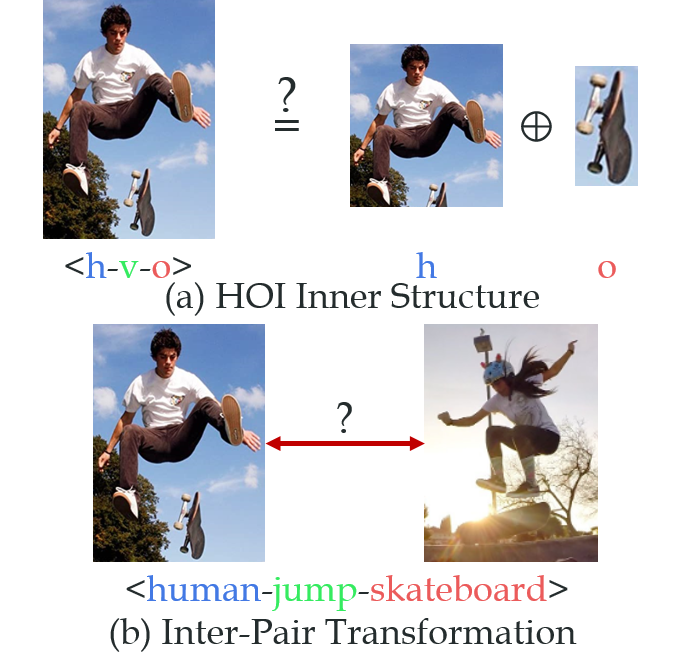

CVPR 2020 [arXiv] [PDF] [Video] [Slides] [Benchmark: Ambiguous-HOI] [Code]

Tech Report, HAKE 1.0 [arXiv] [PDF] [Project] [Code]

Main Repo:

Sub-repos: Torch, TF, HAKE-AVA, Halpe, List

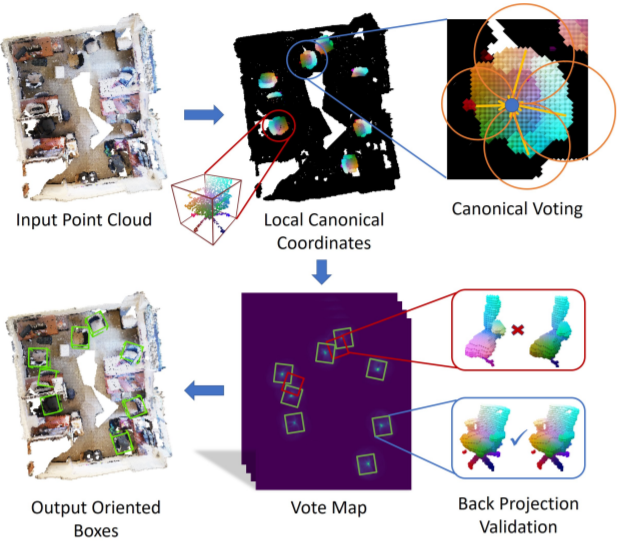

ECCV 2018 [arXiv] [PDF] [Dataset (Instance-60k & 3D Object Models)] [Code]

CVPR 2018 [PDF]

ICPR 2016 [PDF]

Contents:

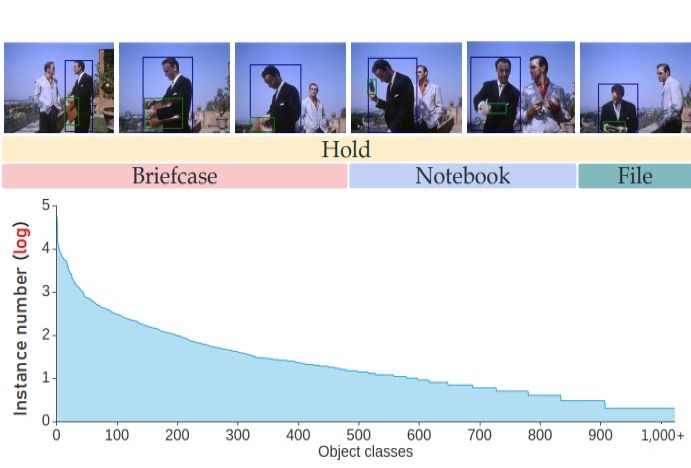

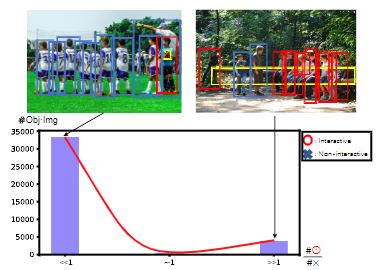

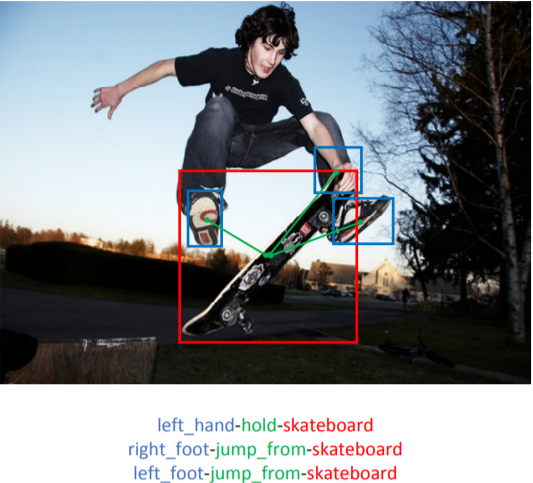

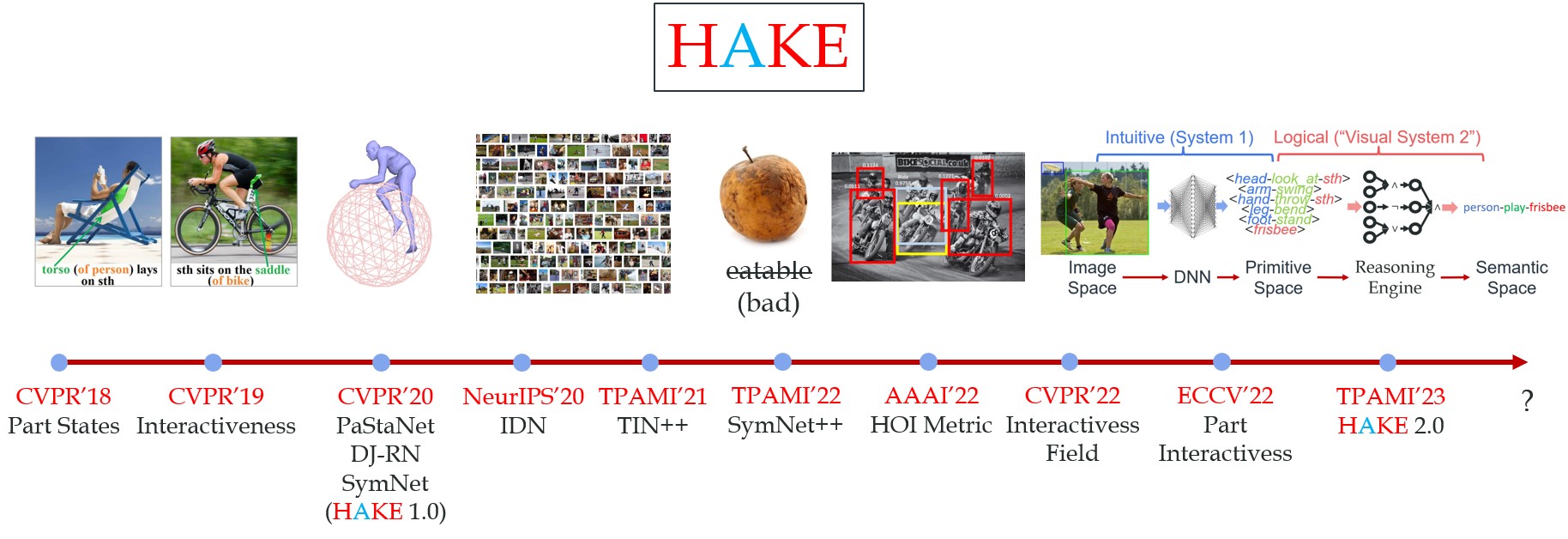

1) HAKE-Image (CVPR'18/20): Human body part state (PaSta) labels in images. Includes HAKE-HICO, HAKE-HICO-DET, HAKE-Large, and Extra-40-verbs.

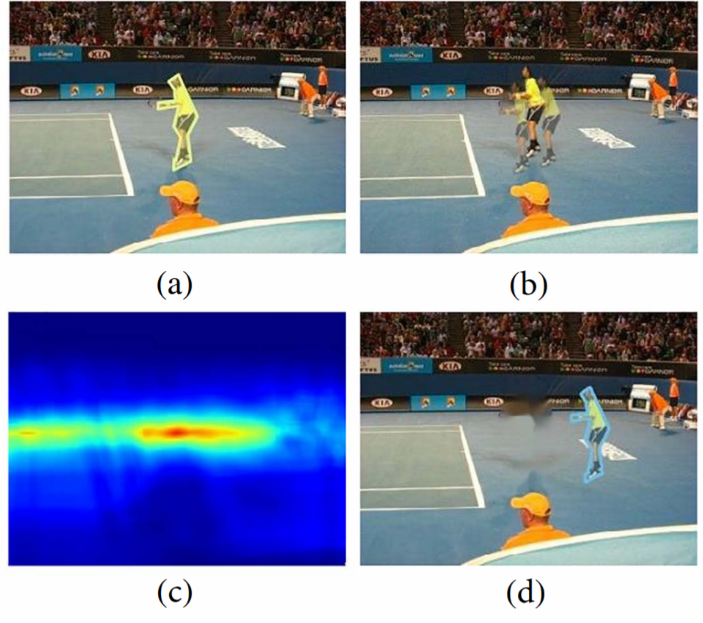

2) HAKE-AVA: Human body part state (PaSta) labels in videos from the AVA dataset. HAKE-AVA.

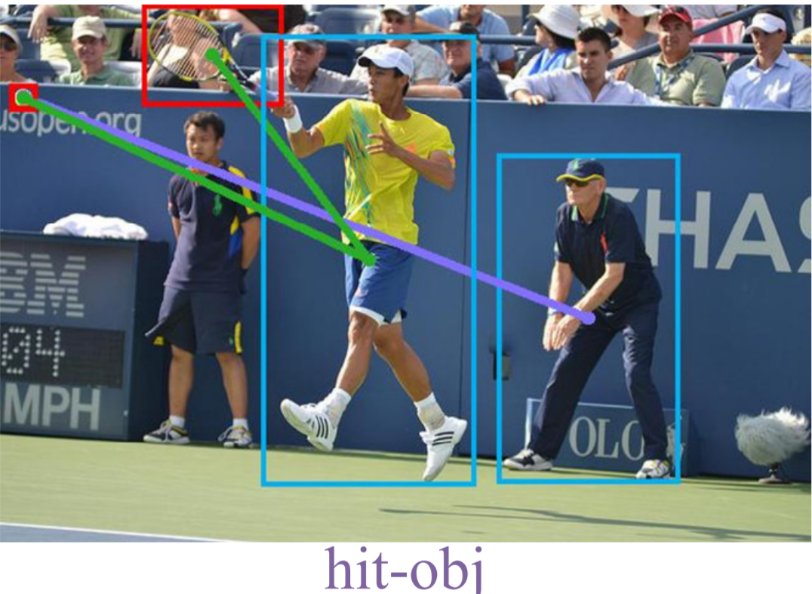

3) HAKE-Action-TF, HAKE-Action-Torch (CVPR'18/19/22, NeurIPS'20, TPAMI'22/23, ECCV'22, AAAI'22): SOTA action understanding methods and the corresponding HAKE-enhanced versions (TIN, IDN, IF, ParMap).

4) HAKE-3D (CVPR'20): 3D human-object representation for action understanding (DJ-RN).

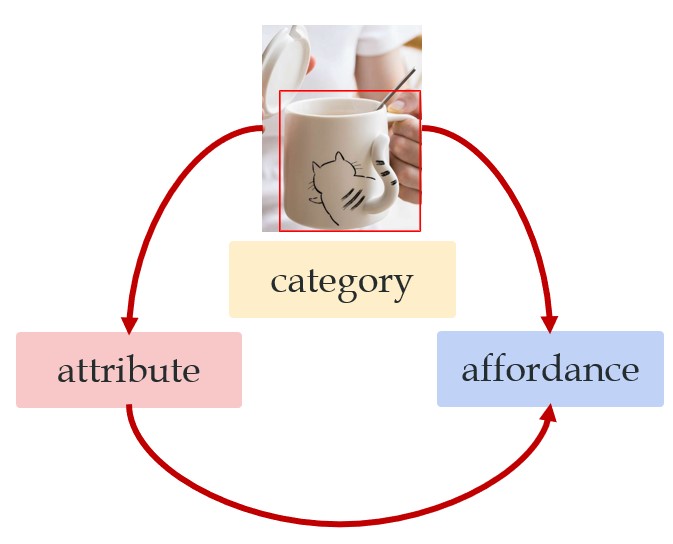

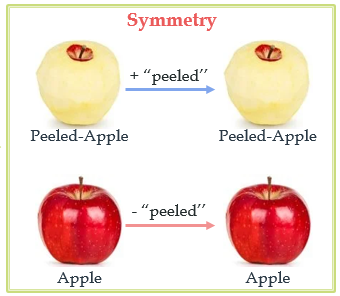

5) HAKE-Object (CVPR'20, TPAMI'21): an object knowledge learner to advance action understanding (SymNet).

6) HAKE-A2V (CVPR'20): Activity2Vec, a general activity feature extractor based on HAKE data that converts a human (box) into a fixed-size vector plus PaSta and action scores.

7) Halpe: a joint project under AlphaPose and HAKE that provides full-body human keypoints (body, face, hand; 136 points) for 50,000 HOI images.

8) HOI Learning List: a list of recent HOI (Human-Object Interaction) papers, code, datasets, and leaderboards on widely used benchmarks.